The demand for content across all kinds of sectors—news and podcasts, entertainment and gaming, advertising and e-learning—is driving an exploding creator economy. Those creators need voice talent—voice-overs that can be created quickly, revised collaboratively, and delivered at scale.

WellSaid Labs is helping to meet that need by using AI and generative neural networks to produce high-quality synthetic voices that match the pacing, cadence, and intonation of human delivery, yielding text-to-speech audio that is nearly indistinguishable from human speech.

At Qualcomm Ventures, we’re excited to announce our recent investment in WellSaid Labs. We believe the company has created an industry-leading product that will open up exciting new applications for voice including e-learning, advertising, and news.

WellSaid’s technology delivers synthetic voices that don’t sound like robots. Instead, they’re lifelike and built from the voices of real people. “We’re not just modeling the sounds of someone’s voice,” explained Matt Hocking, CEO and co-founder of WellSaid Labs. “We’re modeling the pitch, the pausing, the intonation, the inflection.”

The human likeness that WellSaid can produce is superior to that of any other startup in the space that we’ve seen. In fact, in recent third-party testing, a WellSaid-generated voice earned a mean opinion score of 4.82 out of a possible 5 for naturalness of speech. A human voice actor put through the same test also scored 4.82.
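For readers unfamiliar with the metric, a mean opinion score is simply the average of listener ratings on a 1-to-5 scale. The sketch below shows that arithmetic. The function name and the rating lists are hypothetical illustrations, not data from the third-party test cited above.

```python
from statistics import mean


def mean_opinion_score(ratings):
    """Average a list of listener ratings given on a 1-to-5 scale."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for rating in ratings:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside the 1-to-5 scale")
    return mean(ratings)


# Hypothetical ratings, invented purely for illustration.
synthetic_ratings = [5, 5, 4, 5, 5]
human_ratings = [5, 4, 5, 5, 5]

synthetic_mos = mean_opinion_score(synthetic_ratings)
human_mos = mean_opinion_score(human_ratings)
print(f"synthetic: {synthetic_mos:.2f}, human: {human_mos:.2f}")
```

When the two averages match, as in this made-up data, listeners on average rated the synthetic voice as natural as the human one.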

Not only are we impressed by the naturalness of the speech produced, but we also see opportunities in WellSaid’s ease of use and in the length of audio it can generate effectively. While other services can produce human-sounding speech for five- or ten-second clips, WellSaid can produce outstanding audio for long-form content.

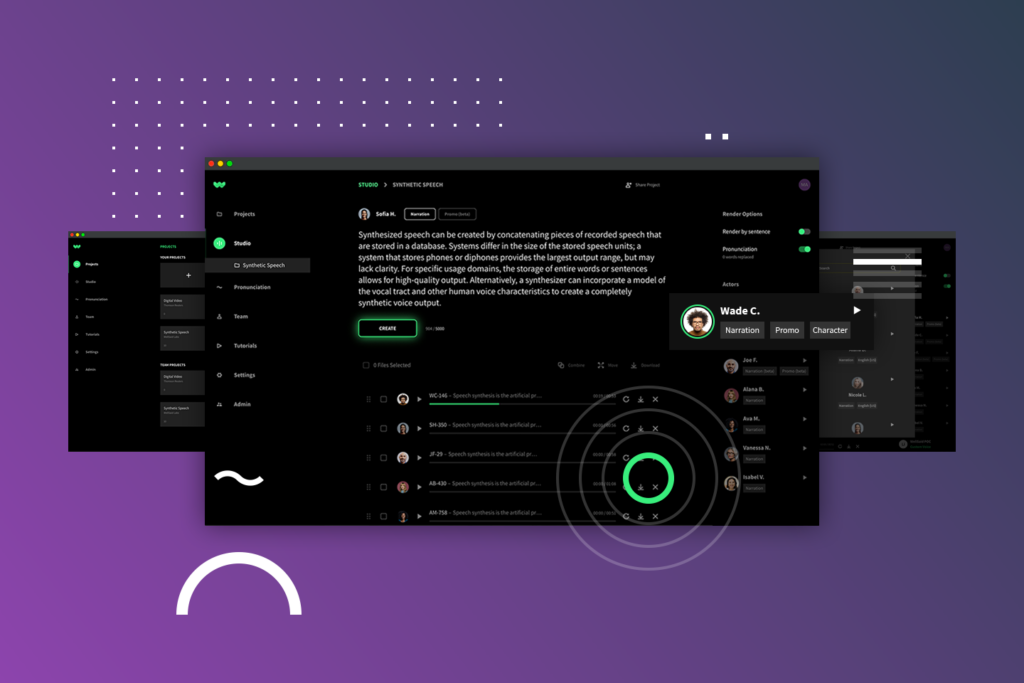

WellSaid’s goal is to take this technology and put it in the hands of content creators who can use it. Until now, high-quality voice-overs could not be created on demand and at scale in a traditional workflow.

Hocking understands those needs: His background is in creative and product roles at agencies and startups. WellSaid’s CTO and co-founder, Michael Petrochuk, is a software engineer and deep learning researcher. They developed WellSaid in 2018 at the Allen Institute for Artificial Intelligence (AI2), the research institute founded by Paul Allen.

In the past, text-to-speech solutions would record someone speaking, cut that recording into syllables, and then stitch the needed sounds back together, creating words artificially. WellSaid instead uses someone’s recorded speech to train a deep learning model that can then speak any word imaginable like that speaker.
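The older stitching approach can be sketched in a few lines. This is a toy illustration under loud assumptions: the syllable bank, its sample values, and the function name are all made up, and real systems work on recorded waveforms rather than short lists of numbers. It is not WellSaid’s method or any vendor’s actual implementation.

```python
# Hypothetical bank of recorded units: syllable -> audio samples.
# The numbers are placeholders standing in for real waveform data.
UNIT_BANK = {
    "hel": [0.1, 0.3, 0.2],
    "lo": [0.4, 0.1],
    "world": [0.2, 0.5, 0.3, 0.1],
}


def synthesize_concatenative(syllables, unit_bank):
    """Stitch pre-recorded syllable clips together in order."""
    missing = [s for s in syllables if s not in unit_bank]
    if missing:
        # A stitching system can only say what was recorded and cut up.
        raise KeyError(f"no recording for: {missing}")
    audio = []
    for syllable in syllables:
        audio.extend(unit_bank[syllable])
    return audio


hello_audio = synthesize_concatenative(["hel", "lo"], UNIT_BANK)
print(len(hello_audio))
```

The `KeyError` branch shows the limitation that a trained model avoids: a stitching system cannot voice a sound it has no recording for, whereas a model learned from the speaker’s recordings can generate speech for new text.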

WellSaid has trained a large number of voices to build into its AI. That allows customers to find the right voice for their project and create a sonic identity for their brand, equivalent to its visual identity. Because the AI creates the audio, the voices are available to content creators at any hour of the day or night and can be quickly edited and revised as launch dates or product names change. Distributed teams can also easily maintain a consistent brand voice. Together, these benefits translate into more efficiency and greater speed.

The initial customer focus has been on helping corporate content creators make better, more engaging training materials cost-effectively. Those clients are now looking for more public-facing applications, such as media and entertainment. There are also opportunities in edge applications and smart devices, as brands look for ways to engage with their customers beyond the screen.

We also see WellSaid Labs’ easy-to-use technology moving to IoT and automotive, especially as 5G is deployed, bandwidth grows, and latency decreases.

And taking a broader perspective, the cutting-edge capabilities of WellSaid’s text-to-speech technology fit well into our investment strategy of nurturing the startups that are enabling the next generation of AI.